In this assignment I was tasked with implementing a simple ray-tracer capable of rendering scenes of spheres lit by light sources.

In the previous homework, we implemented a parser for a simple scene file, and the outline of the tracing code was already implemented. That homework was written in C++.

I am not super fond of C++, for many reasons. For one, I think it’s bloated: each iteration of the C++ standard may be an improvement, but keeping backwards compatibility creates about a million different ways to do the same thing. Furthermore, the C++ standard library is almost unreadable, and I like to understand what the functions I’m calling do. Also, compatibility with C brings along the familiar footguns of C (macros and more) on top of the footguns of C++. I could go on, but I’ll spare you.

For these reasons, I decided to write the ray tracer in Zig⚡.

Some things I like about Zig:

- No hidden control flow.

- No hidden memory allocations.

- No preprocessor, no macros.

- C interop.

Rant over.

To start, I reimplemented the scene parser in Zig.

First we need a scene struct:

```zig
pub const Scene = struct {
    // Camera properties
    camera_pos: Vec3 = vec3.zero,
    camera_fwd: Vec3 = Vec3{ 0, 0, -1 },
    camera_up: Vec3 = Vec3{ 0, 1, 0 },
    camera_right: Vec3 = Vec3{ -1, 0, 0 },
    camera_fov_ha: f32 = 45, // field-of-view half-angle (degrees)
    film_resolution: struct { u16, u16 } = .{ 640, 480 },
    output_image: [:0]const u8 = "raytraced.bmp",

    // Scene contents
    spheres: std.ArrayList(Sphere),
    background: Vec3 = Vec3{ 0, 0, 0 },
    lights: std.ArrayList(Light),

    // Maximum number of reflection bounces
    max_depth: u16 = 5,
};
```

Oh, and our Vec3 type:

```zig
pub const Vec3 = @Vector(3, f64);
```
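The code below leans on a small `vec3` namespace (`vec3.zero`, `vec3.splat`, `vec3.dot`, `vec3.norm`, `vec3.unit`) whose bodies aren't shown. Here is a minimal sketch of what such helpers could look like on top of `@Vector`; the bodies, and the extra `cross`, are my assumption rather than the project's code:

```zig
const std = @import("std");

pub const Vec3 = @Vector(3, f64);

// Hypothetical `vec3` namespace: the post calls vec3.zero, vec3.splat,
// vec3.dot, vec3.norm and vec3.unit, but these bodies are assumptions.
pub const vec3 = struct {
    pub const zero: Vec3 = @splat(0.0);

    /// Broadcast a scalar into all three lanes so it can scale a Vec3.
    pub fn splat(s: f64) Vec3 {
        return @splat(s);
    }

    /// Dot product: multiply lane by lane, then sum the lanes.
    pub fn dot(a: Vec3, b: Vec3) f64 {
        return @reduce(.Add, a * b);
    }

    /// Euclidean length.
    pub fn norm(a: Vec3) f64 {
        return @sqrt(dot(a, a));
    }

    /// Unit vector pointing along `a` (assumes `a` is not the zero vector).
    pub fn unit(a: Vec3) Vec3 {
        return a / splat(norm(a));
    }

    /// Right-handed cross product (extra helper, not used in the post).
    pub fn cross(a: Vec3, b: Vec3) Vec3 {
        return Vec3{
            a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0],
        };
    }
};

pub fn main() void {
    const v = Vec3{ 3, 4, 0 };
    const u = vec3.unit(v);
    std.debug.print("norm = {d}, unit = {d} {d} {d}\n", .{ vec3.norm(v), u[0], u[1], u[2] });
}
```

Because `@Vector` arithmetic is lane-wise, `a * b` is a per-component product, so a scalar has to be broadcast with `@splat` before it can scale a vector. That is why `vec3.splat` shows up throughout the code.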

Then we can build up our scene by looping over each line of the scene file, switching on the command, and parsing its values:

```zig
switch (command.?) {
    .camera_pos => scene.camera_pos = try parseVec3(vals),
    .camera_fwd => scene.camera_fwd = try parseVec3(vals),
    .camera_up => scene.camera_up = try parseVec3(vals),
    .camera_fov_ha => scene.camera_fov_ha = try std.fmt.parseFloat(f32, vals),
    // ...
}
```
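The line loop and `parseVec3` aren't shown. Below is a minimal sketch, assuming a `name: x y z` line layout and a `Command` enum looked up with `std.meta.stringToEnum`. Both are hypothetical here, though the optional that `stringToEnum` returns would fit the `command.?` above:

```zig
const std = @import("std");

const Vec3 = @Vector(3, f64);

// Hypothetical command enum listing only the four commands shown above.
// std.meta.stringToEnum returns ?Command, which would explain `command.?`.
const Command = enum { camera_pos, camera_fwd, camera_up, camera_fov_ha };

/// Split a line such as "camera_pos: 0 0 5" into its command and value text.
/// Assumes a "name: values" layout; returns null for unknown commands.
fn parseLine(line: []const u8) ?struct { Command, []const u8 } {
    const colon = std.mem.indexOfScalar(u8, line, ':') orelse return null;
    const name = std.mem.trim(u8, line[0..colon], " \t");
    const cmd = std.meta.stringToEnum(Command, name) orelse return null;
    return .{ cmd, std.mem.trim(u8, line[colon + 1 ..], " \t\r") };
}

/// Parse exactly three whitespace-separated floats into a Vec3.
fn parseVec3(vals: []const u8) !Vec3 {
    var it = std.mem.tokenizeAny(u8, vals, " \t\r");
    var out: [3]f64 = undefined;
    var count: usize = 0;
    while (it.next()) |tok| : (count += 1) {
        if (count == 3) return error.TooManyComponents;
        out[count] = try std.fmt.parseFloat(f64, tok);
    }
    if (count != 3) return error.TooFewComponents;
    const v: Vec3 = out; // a [3]f64 array coerces to @Vector(3, f64)
    return v;
}

pub fn main() !void {
    const parsed = parseLine("camera_pos: 0 1.5 -2") orelse return error.UnknownCommand;
    const v = try parseVec3(parsed[1]);
    std.debug.print("{s} -> {d} {d} {d}\n", .{ @tagName(parsed[0]), v[0], v[1], v[2] });
}
```

Returning an error instead of silently filling missing components makes a malformed scene file fail loudly through the `try` in the switch.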

Now that we have built our scene, we need to trace some rays. I ported the tracing example from HW3 to Zig:

```zig
for (0..img_width) |i| {
    for (0..img_height) |j| {
        const f_i: f32 = @floatFromInt(i);
        const f_j: f32 = @floatFromInt(j);
        // Map the pixel center to image-plane coordinates (u, v)
        const u: f32 = f_half_w - @as(f32, @floatFromInt(img_width)) * (f_i + 0.5) * inv_img_width;
        const v: f32 = f_half_h - @as(f32, @floatFromInt(img_height)) * (f_j + 0.5) * inv_img_height;
        const p: Vec3 = s.camera_pos - vec3.splat(d) * s.camera_fwd + vec3.splat(u) * s.camera_right + vec3.splat(v) * s.camera_up;
        const ray_dir: Vec3 = vec3.unit(p - s.camera_pos);

        // The pixel is white if the ray hits any sphere. Setting the pixel
        // inside the sphere loop would let a later miss overwrite an earlier hit.
        var hit_any = false;
        for (s.spheres.items) |sphere| {
            if (raySphereIntersect(s.camera_pos, ray_dir, sphere)) {
                hit_any = true;
                break;
            }
        }
        const color = if (hit_any) Vec3{ 1, 1, 1 } else Vec3{ 0, 0, 0 };
        output_img.setPixel(@intCast(i), @intCast(j), color);
    }
}
```
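`raySphereIntersect` isn't shown either. Here is a sketch of the standard quadratic test, assuming a hypothetical `Sphere` with `center` and `radius` fields: substituting the ray `start + t * dir` into `|x - center|^2 = radius^2` gives a quadratic in `t`, and the ray hits when there is a real root with `t > 0`.

```zig
const std = @import("std");

const Vec3 = @Vector(3, f64);

// Hypothetical sphere layout; the project's Sphere also carries a Material.
const Sphere = struct { center: Vec3, radius: f64 };

fn dot(a: Vec3, b: Vec3) f64 {
    return @reduce(.Add, a * b);
}

/// True if start + t * dir touches the sphere for some t > 0.
fn raySphereIntersect(start: Vec3, dir: Vec3, sphere: Sphere) bool {
    const oc = start - sphere.center;
    // Coefficients of a*t^2 + b*t + c = 0
    const a = dot(dir, dir);
    const b = 2 * dot(dir, oc);
    const c = dot(oc, oc) - sphere.radius * sphere.radius;
    const discr = b * b - 4 * a * c;
    if (discr < 0) return false; // no real roots: the line misses the sphere
    // a > 0, so this is the larger root; if it is positive, some part of the
    // sphere lies in front of the ray origin (or the origin is inside it).
    const t_far = (-b + @sqrt(discr)) / (2 * a);
    return t_far > 0;
}

pub fn main() void {
    const s = Sphere{ .center = Vec3{ 0, 0, -5 }, .radius = 1 };
    const hit = raySphereIntersect(Vec3{ 0, 0, 0 }, Vec3{ 0, 0, -1 }, s);
    std.debug.print("hit = {}\n", .{hit});
}
```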

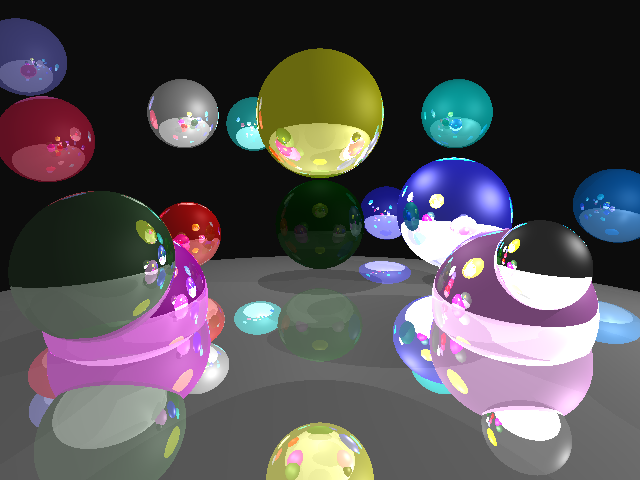

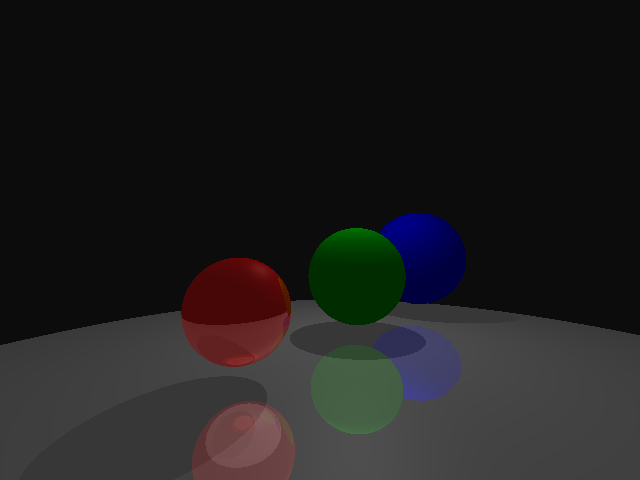

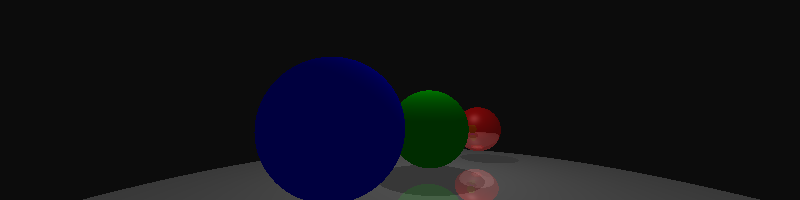

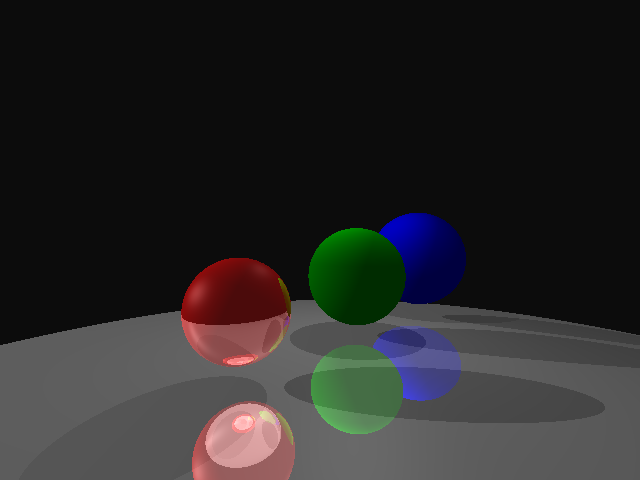

And we get some images!

Okay, we are now at the same point as the end of HW3.

To receive full credit for this assignment, I needed to complete 10 of the following features, with the underlined ones being required.

Scene Setup:

- Camera placement, film resolution, aspect ratio

- User specified background colors

- UI + OpenGL output

- BMP or PNG output

Primitives:

- Spheres

- Difference/Intersection of spheres (Constructive Solid Geometry)

Lighting:

- Ambient lights

- Point light sources

- Shadows

- Multiple light sources

- Directional light sources

- Spot light sources

Sampling:

- Basic Sampling

- Jittered Supersampling

- Adaptive Supersampling

- Motion Blur

- Depth of Field

Materials:

- Color & Specularity (Phong Lighting Model)

- Refraction

- Reflection

A few of these are already complete, since they came with the HW3 starter code.

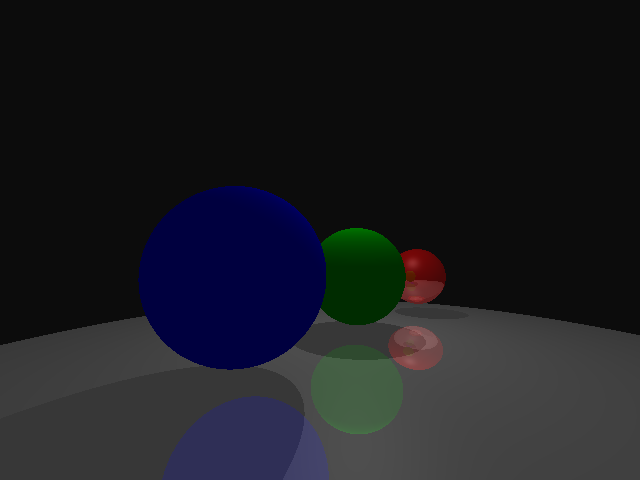

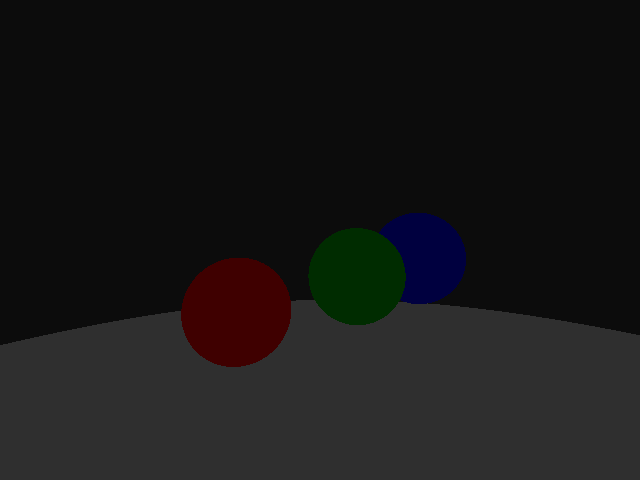

We can change the camera placement:

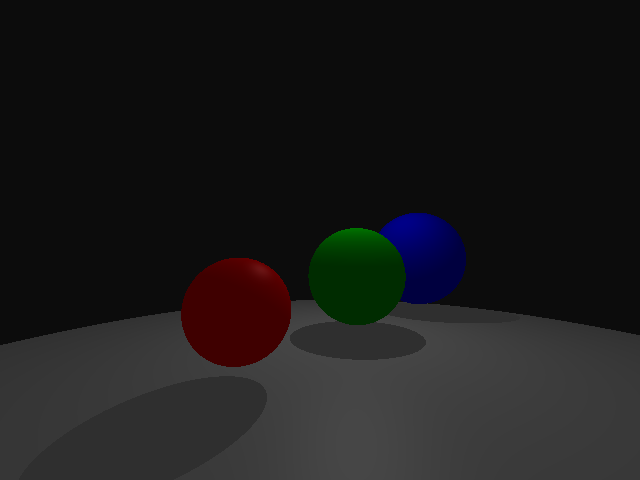
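Moving the camera means `camera_right` has to stay consistent with `camera_fwd` and `camera_up`, and the tracing loop's `d` depends on the field of view. Neither computation is shown above, so here is a sketch under two assumptions: right = up × fwd (which reproduces the default `camera_right = (-1, 0, 0)`), and d = (image height / 2) / tan(`camera_fov_ha`) with the half-angle in degrees. `cameraBasis` and `imagePlaneDistance` are hypothetical names.

```zig
const std = @import("std");

const Vec3 = @Vector(3, f64);

fn dot(a: Vec3, b: Vec3) f64 {
    return @reduce(.Add, a * b);
}

fn unit(a: Vec3) Vec3 {
    const len: Vec3 = @splat(@sqrt(dot(a, a)));
    return a / len;
}

fn cross(a: Vec3, b: Vec3) Vec3 {
    return Vec3{
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    };
}

const CameraBasis = struct { fwd: Vec3, up: Vec3, right: Vec3 };

/// Hypothetical helper: rebuild an orthonormal camera frame after the scene
/// file sets camera_fwd and camera_up. Taking right = up x fwd reproduces the
/// default camera_right = (-1, 0, 0) for fwd = (0, 0, -1), up = (0, 1, 0).
fn cameraBasis(fwd_in: Vec3, up_in: Vec3) CameraBasis {
    const fwd = unit(fwd_in);
    const right = unit(cross(up_in, fwd));
    // Recompute up so it is exactly perpendicular to fwd and right.
    const up = cross(fwd, right);
    return .{ .fwd = fwd, .up = up, .right = right };
}

/// Hypothetical helper: distance from the eye to the image plane so that the
/// field-of-view half-angle (degrees) spans half the image height.
fn imagePlaneDistance(half_height: f64, fov_ha_deg: f64) f64 {
    return half_height / @tan(fov_ha_deg * std.math.pi / 180.0);
}

pub fn main() void {
    const b = cameraBasis(Vec3{ 0, 0, -1 }, Vec3{ 0, 1, 0 });
    std.debug.print("right = {d} {d} {d}, d = {d}\n", .{ b.right[0], b.right[1], b.right[2], imagePlaneDistance(240, 45) });
}
```

Recomputing `up` matters when the scene file gives a `camera_up` that is not exactly perpendicular to `camera_fwd`; without it, the image plane would be skewed.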

First I implemented ambient lighting:

```zig
pub const AmbientLight = struct {
    intensity: Vec3,

    pub fn illuminate(self: AmbientLight, ray: main.Ray, hit_record: main.HitRecord, scene: *const Scene) Vec3 {
        _ = scene;
        _ = ray;
        return self.intensity * hit_record.material.ambient_color;
    }
};
```

For each pixel, if we hit a sphere, its color is the intensity of the ambient light multiplied by the ambient color of the sphere. If we don’t hit a sphere, we set the pixel to the background color.

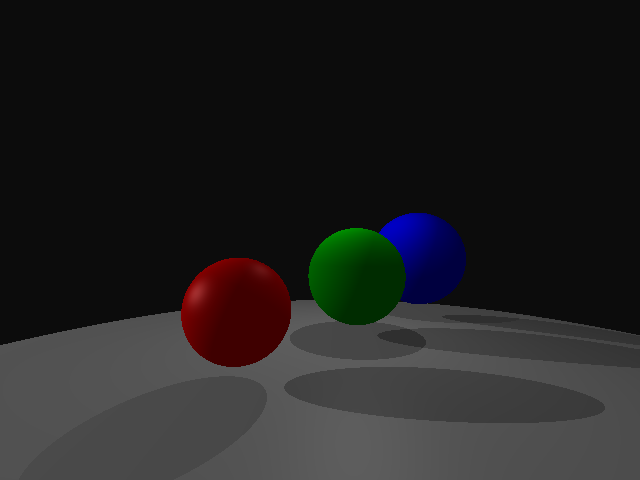

Next, I added a Blinn-Phong material and point lights.

For a point light, the light arriving at a surface point depends on the angle between the surface normal and the direction to the light, and it falls off with the square of the distance from the point to the light.

```zig
pub const PointLight = struct {
    color: Vec3,
    loc: Vec3,

    pub fn illuminate(self: PointLight, ray: main.Ray, hit_record: main.HitRecord, scene: *const Scene) Vec3 {
        _ = scene;
        const n = hit_record.surface_normal;
        const x = ray.eval(hit_record.distance); // hit point
        const r = vec3.norm(self.loc - x); // distance to the light
        const l = (self.loc - x) / vec3.splat(r); // unit direction toward the light
        // Irradiance: cosine term with inverse-square falloff
        const E = vec3.splat(@max(0, vec3.dot(n, l))) * self.color / vec3.splat(r * r);
        // Material response for light direction l and view direction v (toward the ray origin)
        const k = hit_record.material.evaluate(vec3.unit(l), vec3.unit(ray.point - x), n);
        return E * k;
    }
};
```
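In equation form, the point light above computes the following, where $\mathbf{x}$ is the hit point, $\mathbf{p}$ is `loc`, $\mathbf{c}$ is `color`, $\mathbf{v}$ is the unit vector from the hit point back toward the ray origin, and $f$ stands for `Material.evaluate` (this notation is mine, not the assignment's):

$$
r = \lVert \mathbf{p} - \mathbf{x} \rVert, \qquad
\mathbf{l} = \frac{\mathbf{p} - \mathbf{x}}{r}, \qquad
E = \frac{\max(0,\ \mathbf{n} \cdot \mathbf{l})}{r^2}\,\mathbf{c}, \qquad
L = E \odot f(\mathbf{l}, \mathbf{v}, \mathbf{n})
$$

Here $\odot$ is per-channel multiplication (`E * k` on two `Vec3`s). Doubling the distance to the light quarters its contribution.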

Each sphere contains a Material struct that defines how it interacts with light sources. Its evaluate function implements the Blinn-Phong shading model.

```zig
const pi_inv: f64 = 1.0 / std.math.pi;

pub const Material = struct {
    ambient_color: Vec3 = Vec3{ 0, 0, 0 },
    diffuse_color: Vec3 = Vec3{ 1, 1, 1 },
    specular_color: Vec3 = Vec3{ 0, 0, 0 },
    specular_coefficient: f64 = 5,
    transmissive_color: Vec3 = Vec3{ 0, 0, 0 },
    index_of_refraction: f64 = 1,

    pub fn evaluate(self: Material, l: Vec3, v: Vec3, n: Vec3) Vec3 {
        // Half vector between the light and view directions
        const h = vec3.unit(l + v);
        // Lambertian diffuse term, normalized by 1/pi
        const diffuse = self.diffuse_color * vec3.splat(pi_inv);
        // Blinn-Phong specular lobe: (n . h)^specular_coefficient
        const specular = self.specular_color * vec3.splat(std.math.pow(f64, @max(0, vec3.dot(n, h)), self.specular_coefficient));
        return diffuse + specular;
    }
};
```
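To see how `specular_coefficient` shapes the highlight, here is a trimmed, standalone copy of `evaluate`. Only the fields it reads are kept, and the `vec3` helpers are inlined as `dot` and `unit`, so this is a sketch rather than the project file. With the light straight overhead, the specular term drops from 1 when the viewer is also overhead to about 0.18 for a grazing viewer, because there n · h = cos(45°) and cos(45°)^5 ≈ 0.177:

```zig
const std = @import("std");

const Vec3 = @Vector(3, f64);
const pi_inv: f64 = 1.0 / std.math.pi;

fn dot(a: Vec3, b: Vec3) f64 {
    return @reduce(.Add, a * b);
}

fn unit(a: Vec3) Vec3 {
    const len: Vec3 = @splat(@sqrt(dot(a, a)));
    return a / len;
}

// Trimmed copy of the Material above with only the fields evaluate() reads.
const Material = struct {
    diffuse_color: Vec3 = Vec3{ 1, 1, 1 },
    specular_color: Vec3 = Vec3{ 0, 0, 0 },
    specular_coefficient: f64 = 5,

    fn evaluate(self: Material, l: Vec3, v: Vec3, n: Vec3) Vec3 {
        const h = unit(l + v); // half vector
        const diffuse = self.diffuse_color * @as(Vec3, @splat(pi_inv));
        const spec = std.math.pow(f64, @max(0, dot(n, h)), self.specular_coefficient);
        return diffuse + self.specular_color * @as(Vec3, @splat(spec));
    }
};

pub fn main() void {
    const m = Material{ .specular_color = Vec3{ 1, 1, 1 } };
    const n = Vec3{ 0, 0, 1 };
    // Light straight overhead; the viewer moves from overhead toward grazing.
    const views = [_]Vec3{ n, unit(Vec3{ 1, 0, 1 }), Vec3{ 1, 0, 0 } };
    for (views) |v| {
        std.debug.print("{d}\n", .{m.evaluate(n, v, n)[0]});
    }
}
```

Raising `specular_coefficient` shrinks the highlight: the same grazing configuration gives cos(45°)^50 ≈ 3e-8, so the highlight is effectively gone except near the mirror direction.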

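Implementing shadows from here is pretty easy. From the hit point, we cast a shadow ray toward the light. If it hits any object closer than the light (distance r), the point is in shadow and that light contributes nothing. The small minimum distance of 0.002 keeps the shadow ray from immediately hitting the surface it starts on.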

```zig
// Shadow ray from the hit point toward the light, searched only up to the
// light's distance r (scene is now used, so `_ = scene;` goes away)
const srec = scene.hit(.{ .point = x, .dir = l }, 0.002, r);
if (srec != null) {
    // We hit an object on the way to the light, so we are in shadow
    return vec3.zero;
} else {
    const E = vec3.splat(@max(0, vec3.dot(n, l))) * self.color / vec3.splat(r * r);
    const k = hit_record.material.evaluate(vec3.unit(l), vec3.unit(ray.point - x), n);
    return E * k;
}
```
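The shadow check relies on `scene.hit(ray, t_min, t_max)` returning the closest hit, if any, inside a distance window. Here is a minimal sketch of that search over spheres; the `Ray`, `Sphere`, and `HitRecord` layouts are simplified assumptions (the real `HitRecord` also carries a material), and `hit` is written as a free function over a slice rather than a `Scene` method:

```zig
const std = @import("std");

const Vec3 = @Vector(3, f64);

fn dot(a: Vec3, b: Vec3) f64 {
    return @reduce(.Add, a * b);
}

const Ray = struct {
    point: Vec3,
    dir: Vec3,

    fn eval(self: Ray, t: f64) Vec3 {
        return self.point + self.dir * @as(Vec3, @splat(t));
    }
};

const Sphere = struct { center: Vec3, radius: f64 };

// Simplified stand-in for main.HitRecord (no material here).
const HitRecord = struct { distance: f64, surface_normal: Vec3 };

/// Closest intersection with t strictly between t_min and t_max, or null.
fn hit(spheres: []const Sphere, ray: Ray, t_min: f64, t_max: f64) ?HitRecord {
    var closest: ?HitRecord = null;
    var best = t_max; // shrink the window as closer hits are found
    for (spheres) |s| {
        const oc = ray.point - s.center;
        const a = dot(ray.dir, ray.dir);
        const half_b = dot(ray.dir, oc);
        const c = dot(oc, oc) - s.radius * s.radius;
        const disc = half_b * half_b - a * c;
        if (disc < 0) continue;
        const sq = @sqrt(disc);
        // Try the nearer root first, then the farther one.
        var t = (-half_b - sq) / a;
        if (t <= t_min or t >= best) {
            t = (-half_b + sq) / a;
            if (t <= t_min or t >= best) continue;
        }
        best = t;
        const n = (ray.eval(t) - s.center) / @as(Vec3, @splat(s.radius));
        closest = .{ .distance = t, .surface_normal = n };
    }
    return closest;
}

pub fn main() void {
    const spheres = [_]Sphere{.{ .center = Vec3{ 0, 0, -5 }, .radius = 1 }};
    const ray = Ray{ .point = Vec3{ 0, 0, 0 }, .dir = Vec3{ 0, 0, -1 } };
    if (hit(&spheres, ray, 0.002, std.math.inf(f64))) |rec| {
        std.debug.print("hit at t = {d}\n", .{rec.distance});
    }
}
```

Passing `t_max = r` is what makes the point-light shadow test ignore objects that sit behind the light.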

We can support multiple light sources too, by looping over each Light in the scene and adding the result of illuminate to the color:

```zig
for (scene.lights.items) |light| {
    // illuminate also takes the scene, so lights can cast shadow rays
    color += light.illuminate(ray, closest_hit, &scene);
}
```
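The loop calls `light.illuminate` on a single `Light` type even though there are several kinds of light. One way to get that in Zig, assuming `Light` is a tagged union (its definition isn't shown), is an `inline else` switch that forwards the call to the active variant. To keep this sketch self-contained, `illuminate` here takes only the hit point and normal instead of `(ray, hit_record, scene)`:

```zig
const std = @import("std");

const Vec3 = @Vector(3, f64);

fn dot(a: Vec3, b: Vec3) f64 {
    return @reduce(.Add, a * b);
}

// Simplified light types with a reduced illuminate(x, n) signature.
const AmbientLight = struct {
    intensity: Vec3,

    fn illuminate(self: AmbientLight, x: Vec3, n: Vec3) Vec3 {
        _ = x;
        _ = n;
        return self.intensity;
    }
};

const DirectionalLight = struct {
    color: Vec3,
    direction: Vec3, // assumed unit length

    fn illuminate(self: DirectionalLight, x: Vec3, n: Vec3) Vec3 {
        _ = x;
        const cos_theta = @max(0, dot(n, -self.direction));
        return self.color * @as(Vec3, @splat(cos_theta));
    }
};

/// Hypothetical tagged union: `inline else` generates one prong per variant
/// and calls that variant's own illuminate method.
const Light = union(enum) {
    ambient: AmbientLight,
    directional: DirectionalLight,

    fn illuminate(self: Light, x: Vec3, n: Vec3) Vec3 {
        return switch (self) {
            inline else => |variant| variant.illuminate(x, n),
        };
    }
};

/// Sum the contribution of every light, as in the loop above.
fn shade(lights: []const Light, x: Vec3, n: Vec3) Vec3 {
    var color: Vec3 = @splat(0.0);
    for (lights) |light| color += light.illuminate(x, n);
    return color;
}

pub fn main() void {
    const lights = [_]Light{
        .{ .ambient = .{ .intensity = Vec3{ 0.1, 0.1, 0.1 } } },
        .{ .directional = .{ .color = Vec3{ 1, 1, 1 }, .direction = Vec3{ 0, -1, 0 } } },
    };
    const c = shade(&lights, Vec3{ 0, 0, 0 }, Vec3{ 0, 1, 0 });
    std.debug.print("{d} {d} {d}\n", .{ c[0], c[1], c[2] });
}
```

With `inline else`, adding another light type (a spot light, say) only requires a new union field whose type has a matching `illuminate` method.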

Extra Credit

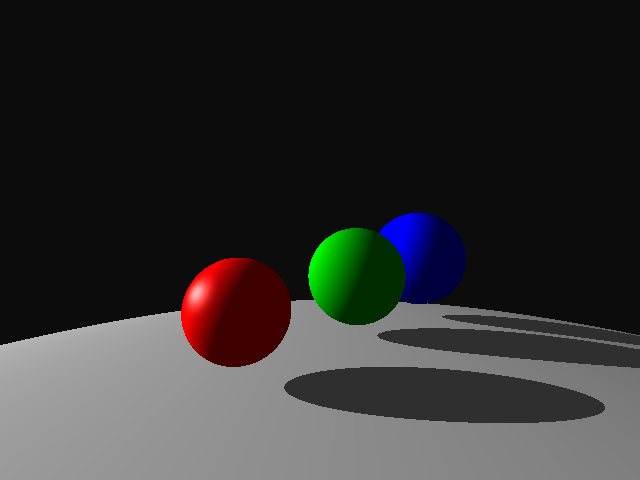

Directional Lights

Directional lights are like point lights, except that their intensity does not fall off with the inverse-square law, and their light travels in a single fixed direction instead of radiating out from a point.

```zig
pub const DirectionalLight = struct {
    color: Vec3,
    direction: Vec3,

    pub fn illuminate(self: DirectionalLight, ray: main.Ray, hit_record: main.HitRecord, scene: *const Scene) Vec3 {
        const x = ray.eval(hit_record.distance);
        // Here l = -direction, whereas for a point light l = vec3.unit(self.loc - x)
        const l = vec3.unit(-self.direction);
        const n = hit_record.surface_normal;
        // The light is infinitely far away, so the shadow ray has no upper bound.
        // Using the unit vector l keeps the 0.002 offset in world units.
        const srec = scene.hit(.{ .point = x, .dir = l }, 0.002, std.math.inf(f64));
        if (srec != null) {
            // We hit an object on the way to the light, so we are in shadow
            return vec3.zero;
        } else {
            // Unlike the point light, E is not divided by r * r
            const E = self.color * vec3.splat(@max(0, vec3.dot(n, l)));
            const v = vec3.unit(ray.point - x);
            const k = hit_record.material.evaluate(vec3.unit(l), v, n);
            return E * k;
        }
    }
};
```
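For comparison with a point light, whose irradiance is $E = \max(0, \mathbf{n}\cdot\mathbf{l})\,\mathbf{c} / r^2$, the directional light drops the distance term entirely. With $\mathbf{d}$ = `direction`, $\mathbf{c}$ = `color`, and $f$ = `Material.evaluate` (my notation):

$$
\mathbf{l} = -\frac{\mathbf{d}}{\lVert \mathbf{d} \rVert}, \qquad
E = \max(0,\ \mathbf{n} \cdot \mathbf{l})\,\mathbf{c}, \qquad
L = E \odot f(\mathbf{l}, \mathbf{v}, \mathbf{n})
$$

Because $\mathbf{l}$ is the same at every hit point, all unshadowed surfaces with the same orientation receive the same irradiance, no matter where they are in the scene.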

Reflections

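To add reflections, we make our shadeRay function recursive: at each hit, we trace one mirror-reflected ray and add its color, weighted by the material's specular color, until we run out of bounces.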

```zig
pub fn shadeRay(self: Scene, ray: main.Ray, bounces: u16) Vec3 {
    const hit_obj: ?main.HitRecord = self.hit(ray, 0, std.math.inf(f64));
    // Nothing was hit: return the background color
    const rec = hit_obj orelse return self.background;

    // Direct lighting: sum the contribution of every light
    var color: Vec3 = vec3.zero;
    for (self.lights.items) |light| {
        color += light.illuminate(ray, rec, &self);
    }

    // Reflection: trace one mirror ray per hit. This sits outside the light
    // loop so the reflected color is not added once per light.
    if (bounces > 0) {
        const n = rec.surface_normal;
        const reflection = ray.dir - vec3.splat(2 * vec3.dot(ray.dir, n)) * n;
        // bounce_point + eps * normal, so the new ray doesn't re-hit the surface
        const p = ray.eval(rec.distance) + n * vec3.splat(0.001);
        // Recurse, adding on the reflected ray
        color += rec.material.specular_color * self.shadeRay(.{ .dir = reflection, .point = p }, bounces - 1);
    }

    return color;
}
```
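The mirror direction in `shadeRay` is r = d - 2(d · n)n for a unit normal n. Here is a small standalone check of that formula; `reflect` is a hypothetical helper name, not a function from the project:

```zig
const std = @import("std");

const Vec3 = @Vector(3, f64);

fn dot(a: Vec3, b: Vec3) f64 {
    return @reduce(.Add, a * b);
}

/// Mirror direction d about unit normal n: r = d - 2 (d . n) n.
/// Same expression as `reflection` in shadeRay; n must be unit length.
fn reflect(d: Vec3, n: Vec3) Vec3 {
    return d - @as(Vec3, @splat(2 * dot(d, n))) * n;
}

pub fn main() void {
    // A ray heading down and to the right bounces off a floor facing +y.
    const r = reflect(Vec3{ 1, -1, 0 }, Vec3{ 0, 1, 0 });
    std.debug.print("{d} {d} {d}\n", .{ r[0], r[1], r[2] });
}
```

Only the component along the normal flips sign, so the reflected ray keeps the incoming ray's length and angle. Since each hit spawns at most one reflected ray, a pixel costs at most `bounces + 1` calls to `shadeRay`; if the first call passes `max_depth`, that field bounds the recursion.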

Code

The code requires Zig 0.15.1 or newer and can be compiled with `zig build -Doptimize=ReleaseFast`.

The executable can then be run with `./zig-out/bin/project_3a MyScenes/camera_pos.txt`.

Additional Scenes